3_logistic_regression_with_numpy.ipynb

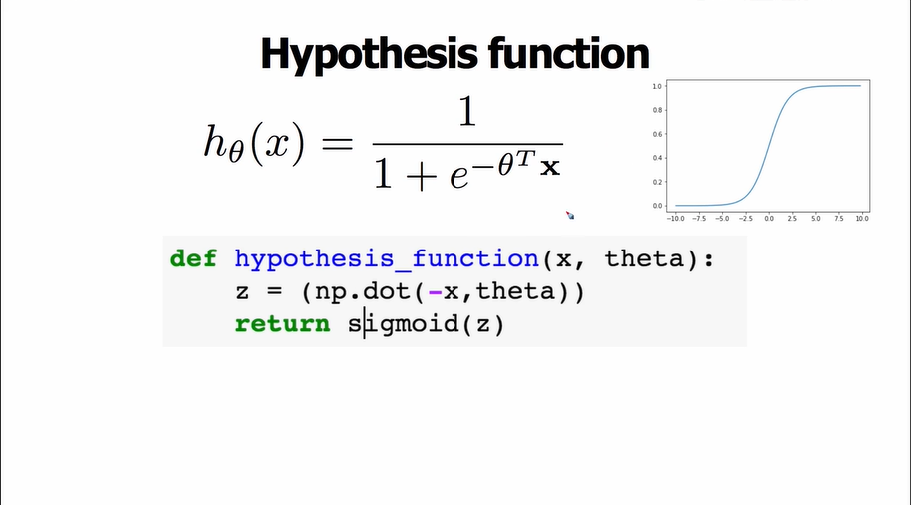

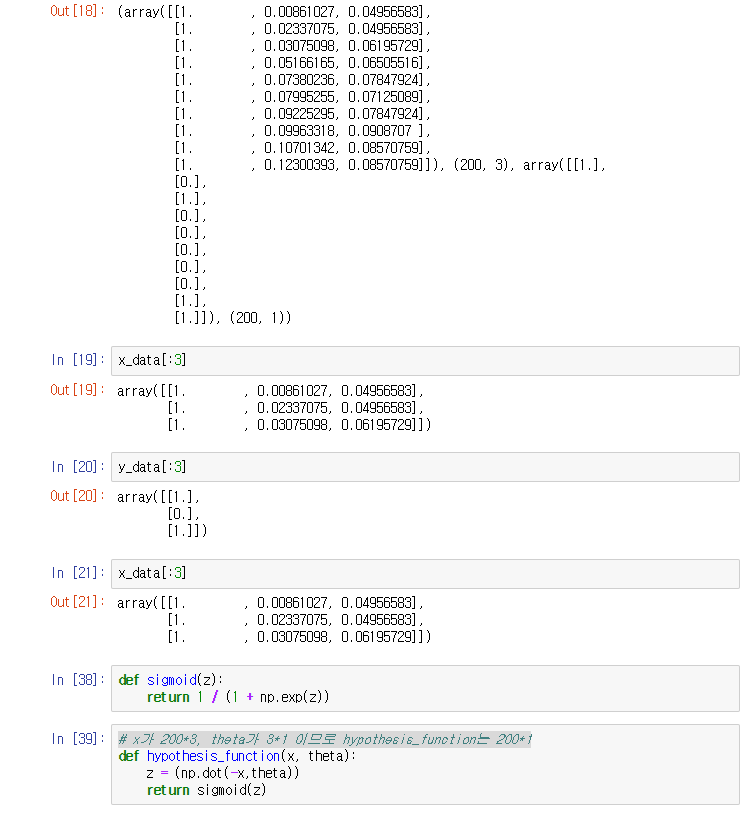

# x is 200×3 and theta is 3×1, so hypothesis_function(x, theta) is 200×1
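The shape logic can be sketched as follows. This is a minimal sketch that assumes `hypothesis_function` is the usual sigmoid of `x.dot(theta)` (the notebook's actual definition appears only in the screenshots). The data is random stand-in data with the stated sizes: a bias column plus two features.

```python
import numpy as np

def sigmoid(z):
    # Logistic function: maps any real number into (0, 1)
    return 1.0 / (1.0 + np.exp(-z))

def hypothesis_function(x, theta):
    # Assumed definition: (200, 3) @ (3, 1) -> (200, 1), one probability per sample
    return sigmoid(x.dot(theta))

rng = np.random.default_rng(0)
# Hypothetical data: a column of ones (bias) plus 2 random features -> (200, 3)
x = np.hstack([np.ones((200, 1)), rng.normal(size=(200, 2))])
theta = np.zeros((3, 1))

h = hypothesis_function(x, theta)
print(h.shape)  # (200, 1)
```

With `theta` all zeros every prediction is sigmoid(0) = 0.5, which makes the result easy to check by eye.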

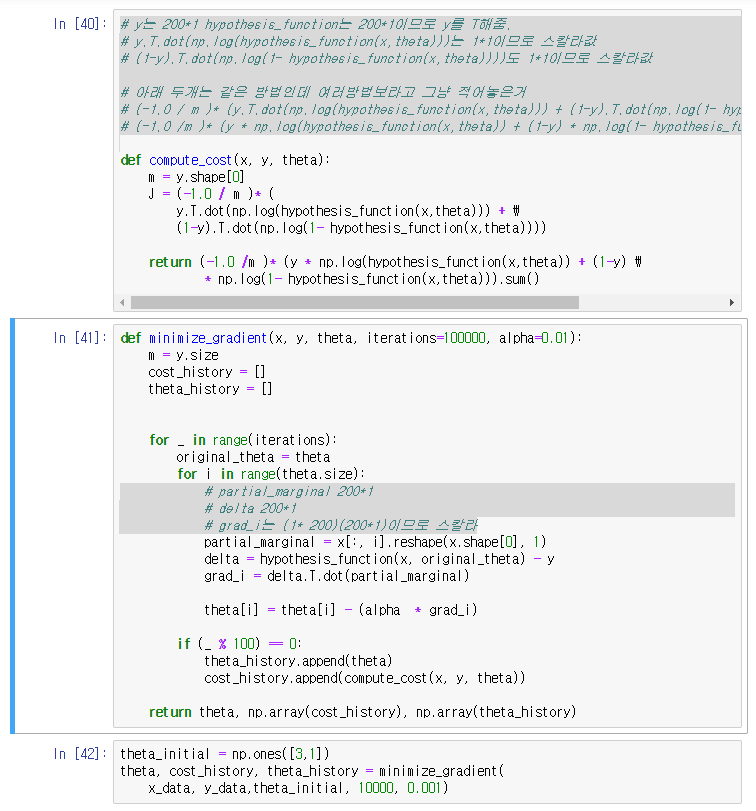

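# y is 200×1 and hypothesis_function(x, theta) is also 200×1, so y is transposed (y.T is 1×200) to make the matrix product valid.

# y.T.dot(np.log(hypothesis_function(x, theta))) is (1×200)(200×1) = 1×1, i.e. a scalar value.

# (1 - y).T.dot(np.log(1 - hypothesis_function(x, theta))) is likewise 1×1, a scalar value.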
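The shape claims above can be checked directly. This is a minimal sketch with hypothetical random 0/1 labels, again assuming `hypothesis_function` is the sigmoid of `x.dot(theta)`. It shows why the transpose is needed and that the "scalar" is really a 1×1 array.

```python
import numpy as np

def hypothesis_function(x, theta):
    # Assumed definition: sigmoid of the linear combination x·theta
    return 1.0 / (1.0 + np.exp(-x.dot(theta)))

rng = np.random.default_rng(0)
x = np.hstack([np.ones((200, 1)), rng.normal(size=(200, 2))])
y = (rng.random((200, 1)) > 0.5).astype(float)  # hypothetical 0/1 labels, shape (200, 1)
theta = np.zeros((3, 1))

h = hypothesis_function(x, theta)
term1 = y.T.dot(np.log(h))            # (1, 200) @ (200, 1) -> (1, 1)
term2 = (1 - y).T.dot(np.log(1 - h))  # (1, 200) @ (200, 1) -> (1, 1)
print(term1.shape, term2.shape)  # (1, 1) (1, 1)

# Without the transpose the inner dimensions (1 and 200) do not match
try:
    y.dot(np.log(h))
except ValueError as err:
    print("without .T:", err)

# The result is a 1x1 array, not a Python float; .item() extracts the number
print(type(term1.item()))  # <class 'float'>
```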

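# The two expressions below compute the same cost; both are written out just to show different ways of doing it (the dot-product form returns the value as a 1×1 array, the .sum() form as a scalar).

# (-1.0 / m) * (y.T.dot(np.log(hypothesis_function(x, theta))) + (1 - y).T.dot(np.log(1 - hypothesis_function(x, theta))))

# (-1.0 / m) * (y * np.log(hypothesis_function(x, theta)) + (1 - y) * np.log(1 - hypothesis_function(x, theta))).sum()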
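Both forms of the logistic-regression (cross-entropy) cost can be wrapped in functions and compared numerically. This is a sketch: `cost_dot` and `cost_sum` are hypothetical names, the data is random, and `hypothesis_function` is assumed to be the sigmoid of `x.dot(theta)`.

```python
import numpy as np

def hypothesis_function(x, theta):
    # Assumed definition: sigmoid of x·theta
    return 1.0 / (1.0 + np.exp(-x.dot(theta)))

def cost_dot(x, y, theta):
    # Matrix form: the transposes turn each sum into a (1, 1) dot product
    m = len(y)
    h = hypothesis_function(x, theta)
    return ((-1.0 / m) * (y.T.dot(np.log(h)) + (1 - y).T.dot(np.log(1 - h)))).item()

def cost_sum(x, y, theta):
    # Element-wise form: multiply the (200, 1) arrays entry by entry, then sum
    m = len(y)
    h = hypothesis_function(x, theta)
    return (-1.0 / m) * (y * np.log(h) + (1 - y) * np.log(1 - h)).sum()

rng = np.random.default_rng(1)
x = np.hstack([np.ones((200, 1)), rng.normal(size=(200, 2))])
y = (rng.random((200, 1)) > 0.5).astype(float)  # hypothetical labels
theta = rng.normal(size=(3, 1))

print(cost_dot(x, y, theta), cost_sum(x, y, theta))  # same value up to rounding
```

At `theta = 0` every prediction is 0.5, so both functions return log 2 ≈ 0.693, a handy sanity check for any implementation of this cost.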

# partial_marginal is 200×1

# delta is 200×1

# grad_i is (1×200)(200×1), so it is a scalar
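The text gives only the shapes of `partial_marginal`, `delta`, and `grad_i`, not their definitions. The following is a minimal gradient-descent sketch consistent with those shapes. It assumes `delta = hypothesis_function(x, theta) - y` and that `partial_marginal` is column `i` of `x` reshaped to 200×1. Both are assumptions. The helper `gradient_step`, the learning rate, and the data are also hypothetical. Dividing by `m` matches the averaged cost. The original notebook may instead fold that factor into the learning rate.

```python
import numpy as np

def hypothesis_function(x, theta):
    # Assumed definition: sigmoid of x·theta
    return 1.0 / (1.0 + np.exp(-x.dot(theta)))

def gradient_step(x, y, theta, alpha=0.1):
    # Hypothetical helper: one gradient-descent step, written per parameter
    m = x.shape[0]
    delta = hypothesis_function(x, theta) - y          # (200, 1): prediction error (assumed meaning)
    new_theta = theta.copy()
    for i in range(theta.shape[0]):
        partial_marginal = x[:, i].reshape(m, 1)       # (200, 1): column i of x (assumed meaning)
        grad_i = delta.T.dot(partial_marginal).item()  # (1, 200) @ (200, 1) -> scalar
        new_theta[i, 0] -= alpha * grad_i / m          # 1/m matches the averaged cost
    return new_theta

rng = np.random.default_rng(2)
x = np.hstack([np.ones((200, 1)), rng.normal(size=(200, 2))])
y = (x[:, 1:2] + x[:, 2:3] > 0).astype(float)  # hypothetical labels, shape (200, 1)
theta = np.zeros((3, 1))

for _ in range(500):
    theta = gradient_step(x, y, theta)
print(theta.ravel())
```

Because `delta` is computed once per step, every `theta[i]` is updated from the same old `theta` (a simultaneous update). The per-parameter loop is equivalent to the vectorized `x.T.dot(delta) / m`, which has shape 3×1 and yields all the `grad_i` values at once.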
